Architecture

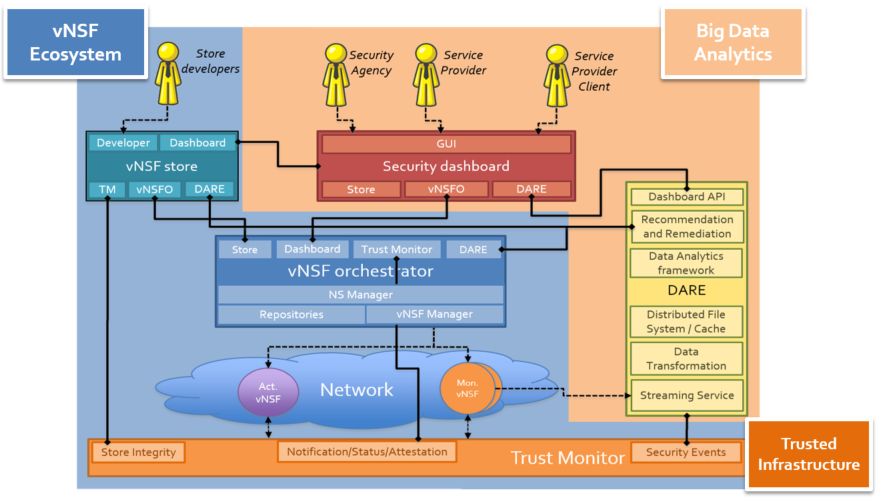

The high-level architecture of the SHIELD platform is organised around the components illustrated in the figure above.

vNSF Ecosystem

vNSF Store

The store holds information on Network Services (NSs) and Virtual Network Security Functions (vNSFs). It allows developers to onboard vNSF and NS packages into a catalog that is later accessible to the vNSF Orchestrator.

Each uploaded package wraps the package structure defined by the NFVO (here, OSM) and adds extra files with metadata about the package (e.g., the OSM version and hashes of its files). This metadata makes it possible to validate the trustworthiness of each uploaded package.
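
As an illustration of how hash metadata can support this validation, the following minimal sketch checks the files of an unpacked package against a manifest of SHA-256 digests. The manifest layout (a mapping from relative path to hex digest) and the function names are assumptions made for this example; they do not describe the actual vNSF Store package format.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Return the hex SHA-256 digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_package(root: Path, manifest: dict[str, str]) -> list[str]:
    """Return the relative paths that are missing or whose digest differs.

    `manifest` maps relative file paths to expected hex digests
    (hypothetical layout, not the real store metadata schema).
    """
    mismatches = []
    for relative_path, expected in manifest.items():
        candidate = root / relative_path
        if not candidate.is_file() or sha256_of(candidate) != expected.lower():
            mismatches.append(relative_path)
    return mismatches
```

An empty result means every file listed in the manifest is present and unmodified; any returned path would be a reason to reject the upload.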

vNSF Orchestrator

The orchestrator provides the other components of the SHIELD platform with interfaces to interact with the components running in the NFVI (that is, the NFVO and the SDN controller).

Its main objective is to deploy Network Services into any given infrastructure and manage their lifecycle. Specifically, it can deploy virtual services into a VIM and undeploy them, as well as start and stop the service running in a VDU.

The orchestrator also allows Network Services to be configured during their instantiation (Day-0), initialisation (Day-1) or runtime (Day-2). This is done through actions, which are defined in the descriptor and implemented by Canonical's Juju charms. The instantiation and initialisation phases are run by OSM during the deployment, at which point the actions defined in the initialisation phase of the descriptor take place. After that, any of the available actions can be carried out at any time during the operation of the Network Service.

The orchestrator also provides other features via its REST API (i.e., the vNSFO API). It keeps a persistent record of the nodes in the infrastructure (as initially defined by hand by the operator of each infrastructure) and uses this knowledge to request both initial and periodic attestation of those nodes. If the attestation reports an untrusted state, the orchestrator applies the predefined actions to bring the node back to a trusted state.
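
The attest-then-remediate flow described above can be sketched as a single attestation round over the registered nodes. This is a minimal sketch under stated assumptions: the `Node` model, the `attest` callback (standing in for a request to the attestation service) and the `remediate` callback (standing in for the predefined recovery actions) are all hypothetical and are not part of the vNSFO API.

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass(frozen=True)
class Node:
    """A node registered by the infrastructure operator (hypothetical model)."""
    node_id: str
    kind: str  # e.g. "host" or "switch"


def attestation_round(
    nodes: Iterable[Node],
    attest: Callable[[Node], bool],
    remediate: Callable[[Node], None],
) -> list[str]:
    """Attest every node once and remediate those reported as untrusted.

    `attest` returns True when the node is trusted; `remediate` applies
    the recovery action. Returns the identifiers of the untrusted nodes.
    """
    untrusted = []
    for node in nodes:
        if not attest(node):
            untrusted.append(node.node_id)
            remediate(node)
    return untrusted
```

Periodic attestation would then amount to calling `attestation_round` from a scheduler at a chosen interval.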

Network

The network, in this diagram, is a loose term that encompasses the NFVI with the VIM and any ancillary network device, physical server or virtual node that serves the operation of the platform (e.g., an SDN controller), as well as the different vNSFs and NSs that are deployed and managed by the NFVO. This network layer operates below the SHIELD platform and must therefore be defined and prepared beforehand (e.g., multiple VIMs must be interconnected in advance), independently of the deployment of the SHIELD platform. Similarly, the definition and development of the vNSF and NS packages is external to the platform and conforms to the structure defined in OSM (the platform supports releases TWO, FOUR and FIVE).

Trusted Infrastructure

Trust Monitor

Once deployed, vNSFs and NSs are verified by the Trust Monitor on bootstrap and at runtime (along with the other nodes of the infrastructure, as mentioned above). In this way, the Trust Monitor assesses their trustworthiness both at start-up and during operation.

Depending on the type of node to verify, this component checks specific pieces of information. For instance, to verify that a switch is in a trusted state, both its i) internal configuration and ii) flows are checked against a trusted set or register. Likewise, to verify the state of a physical host, a set of internal configurations is checked against the expected state. This is done by leveraging Trusted Computing (TC) techniques and TPM chips.
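
The switch check described above, comparing configuration and flows against a trusted reference, can be sketched as follows. This is only an illustration of the comparison step: the data shapes (configuration as a string mapping, flows as a set of textual rules) and the function name are assumptions, and the sketch does not cover the TC/TPM-based attestation itself.

```python
from typing import Mapping


def verify_switch(
    config: Mapping[str, str],
    flows: set[str],
    trusted_config: Mapping[str, str],
    trusted_flows: set[str],
) -> dict:
    """Compare a switch's observed state with its trusted reference."""
    # Configuration keys that were added, removed or changed.
    changed_keys = sorted(
        key
        for key in set(config) | set(trusted_config)
        if config.get(key) != trusted_config.get(key)
    )
    # Flows installed on the switch but absent from the trusted set.
    unexpected = sorted(flows - trusted_flows)
    # Trusted flows that are no longer installed on the switch.
    missing = sorted(trusted_flows - flows)
    return {
        "trusted": not (changed_keys or unexpected or missing),
        "changed_config": changed_keys,
        "unexpected_flows": unexpected,
        "missing_flows": missing,
    }
```

Returning the differences, rather than a bare boolean, lets the caller report why a node was considered untrusted.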

Big Data Analytics

Data Analysis and Remediation Engine

The DARE is an information-driven Intrusion Detection and Prevention System (IDPS) platform that stores and analyses heterogeneous network information previously collected by monitoring vNSFs. It makes use of cognitive and analytical components (for instance, Apache Spot) capable of predicting specific vulnerabilities and attacks.

Large amounts of data are processed and analysed using Big Data, data analytics and Machine Learning (ML) techniques. Data and logs from the vNSFs (placed at strategic locations in the network) are processed here. When a threat is detected as a result of this processing, the DARE components provide mitigation recipes (suggestions) to different actors (for instance, the network operator), who can then carry them out manually.

It consists of three main components: the data acquisition and storage module, the data analytics engine and the remediation engine.

- The data acquisition and storage module enables the ingestion of the data sets and their preparation for further processing. Different types of data collectors and workers (defined by Apache Spot) are in use. Whilst Apache Spot supports the main data sources used by any IDPS (network flows, DNS and web proxy log collection and transformation), the DARE supports NetFlow. In the SHIELD platform, the ingest chain is designed to support both centralised collection with a pre-processing architecture (i.e., pushing raw unprocessed data from the vNSFs to the DARE filesystem as part of the anomaly detection process) and distributed collection (using agents at the monitoring vNSFs to locally preprocess and filter data before dispatching it to the DARE).

  The workers obtain the information from Kafka (by listening to a particular topic of the Kafka cluster) and make it available for later processing with Spot. The supported workers are as follows:

  - The streaming worker consumes the incoming messages on a given topic from Kafka. The streaming data is divided into batches, which the worker deserialises according to the supported Avro schema, parses and registers in the corresponding Hive table.

  - The simple worker consumes the incoming messages on a given topic/partition from Kafka and stores them in HDFS.

- The data analytics engine leverages two different data analytics modules. The cognitive data analytics module produces packet and flow analytics using ML techniques and tools such as Apache Spot, Spark, the Hadoop Distributed File System (HDFS), Kafka and Hive. The security data analysis module receives network flows from the distributed storage system to detect anomalous behaviours related to security issues; it combines Big Data analytics and Machine Learning techniques to process and analyse vast amounts of network data and to automatically discover and classify cybersecurity threats. The latter was adapted to collaborate with the former, and thus improve the analysis results produced by SHIELD.

- The remediation engine is fed with i) the analysis from the data analytics modules, and ii) alerts and contextual information. With this input, it determines a mitigation plan for the existing threats. To do so, it runs in real time or near real time and generates a cybersecurity topology for each detected threat, which is then converted into a high-level abstraction, the remediation recipe. The goal of this component is to output a combination of i) recommendations and alerts that provide relevant threat details to all interested parties through the dashboard, and ii) the direct application of countermeasures by triggering specific vNSFs via the vNSFO (e.g., blocking or redirecting network flows).
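
To make the idea of a remediation recipe more concrete, the sketch below maps a detected threat to a high-level recipe (block, redirect or alert). Everything in it is an assumption made for illustration: the `Threat` and `Recipe` types, the textual traffic selector and the score thresholds are hypothetical and do not reflect the recipe format or decision logic actually used by the DARE.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Threat:
    """A detected threat as reported by the analytics modules (hypothetical)."""
    kind: str        # e.g. "ddos" or "dns_tunnelling"
    source_ip: str
    score: float     # detection confidence in [0, 1]


@dataclass(frozen=True)
class Recipe:
    """High-level remediation recipe: what to do and to which traffic."""
    action: str      # "block", "redirect" or "alert"
    match: str       # traffic selector in a made-up textual form
    reason: str


def build_recipe(
    threat: Threat,
    block_threshold: float = 0.9,     # illustrative value only
    redirect_threshold: float = 0.5,  # illustrative value only
) -> Recipe:
    """Pick a countermeasure whose severity grows with the detection score."""
    if threat.score >= block_threshold:
        action = "block"
    elif threat.score >= redirect_threshold:
        action = "redirect"
    else:
        action = "alert"
    return Recipe(
        action=action,
        match=f"src_ip={threat.source_ip}",
        reason=f"{threat.kind} (score {threat.score:.2f})",
    )
```

In such a design, "alert" recipes would only be shown on the dashboard, whereas "block" and "redirect" recipes could be handed to the vNSFO for application by a vNSF.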

Security Dashboard

This graphical interface allows users with different profiles/roles to log in and perform specific operations. Operators can access monitoring information that gives an overview of the security status, and they can also take action in response to any detected vulnerability. Developers and administrators of the platform can upload/onboard the vNSF and NS packages, respectively. The dashboard also allows billing settings to be defined per Network Service; these are used to measure and charge for the operations that clients perform on the services.

Notifications are provided via the dashboard to report the review and application of threat mitigation actions, alerts on untrusted nodes and the status of services.